Curiosity Has Landed

They did it. The rover is safely on Mars and sent back some pictures.

Occasionally the US wins one.

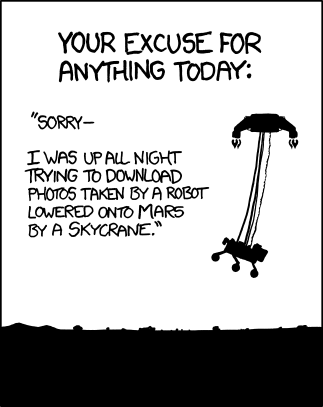

Update: I added the xkcd cartoon, and I would point out that a lot of people and groups not in the US are involved in this effort, including the Canadian Space Agency.

This is basic research, science. This is meant to be shared with everyone to advance everyone’s understanding of what’s happening. The most important discoveries from this mission, as Dr. John Grunsfeld, a physicist and former astronaut, pointed out during the JPL feed this morning, may be something that no one was even looking for because we didn’t even have the information necessary to pose the question.

15 comments

Just a little reminder of the days of our youth when Americans were the technological MOTU. Those people in mission control deserve their celebration!

As one of the administrators just said, “Let the science begin!”

There was a hell of a lot of science, and engineering, involved in getting Curiosity and two satellites in position just to send back the landing telemetry to Earth. Mars still has an eight-mission lead over Earth, with too many of those ‘own-goals’.

Oh, Happy Birthday, Steve.

It was definitely amazing that it worked. Remember that at landing there was a one-way signal lag of roughly 14 minutes between here and Mars, so there was no human in the loop: this was all done autonomously by the on-board computer systems of the various modules. Any of us who’ve ever written significant computer software know just how fragile a complex system can be when presented with inputs its programmers did not foresee. Luckily no such inputs presented themselves…

Especially amazing was that they managed it despite various subsystems being designed and implemented by entirely different teams. I’m sure you’ve experienced the problems that come with that. I just finished patching together a new system from bits and pieces that encompasses code that might have been written yesterday or might date back to 2002, and it was *not* an easy task to make all of this work together. Merely updating the Java release from 1.4 to 1.6 for the GUI caused a ridiculous number of things to go haywire (mostly window positioning relative to the center of the screen and foreground/background windows for dialogs and splash screens, both of which I had to reverse-engineer and fix in the GUI and which behave differently on Windows and Linux, so much for that “write once run anywhere” BS!), and that was the *easy* part…

Really amazing if you know that there is no way in hell of actually testing the systems in real time under real conditions. When they were announcing the variances, starting at less than 300 meters and getting down to less than 10 meters before landing after traveling hundreds of millions of kilometers, it was ‘some damn fine shooting’. When there’s nearly half an hour between when you see a problem and when you can actually do anything about it [round-trip time], precision is obviously pretty iffy.
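The signal delay discussed above is simply distance divided by the speed of light. Below is a minimal C sketch of that arithmetic. The function names are hypothetical. The distance used in the usage note is an assumed, approximate figure rather than one taken from this post: about 248 million km, roughly the Earth-Mars separation in early August 2012.

```c
#include <assert.h>

/* Speed of light in vacuum in metres per second (exact, by definition of the metre). */
#define SPEED_OF_LIGHT_M_PER_S 299792458.0

/* One-way signal delay in seconds for a straight-line distance given in kilometres. */
double one_way_delay_s(double distance_km)
{
    assert(distance_km >= 0.0);
    return distance_km * 1000.0 / SPEED_OF_LIGHT_M_PER_S;
}

/* Round trip: time for telemetry to reach Earth plus time for a reply command to reach Mars. */
double round_trip_delay_s(double distance_km)
{
    return 2.0 * one_way_delay_s(distance_km);
}
```

At that assumed distance, `one_way_delay_s(248e6)` is about 827 s, a little under 14 minutes. A round trip is therefore about 27.6 minutes. The real figure changes daily, from a few minutes to over 20 minutes one way, as the two planets move along their orbits.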

Hell, I was thrilled when other programming teams actually passed the correct number and type of parameters to the code I was working on, because that wasn’t a given, no matter what it said in the specifications.

Having watched my Dad and his crew work out problems with a guidance system using our boat on the bayou, I have a real world appreciation of what’s involved, and they could see and react to problems immediately. That they were controlling the boat using a rotary dial mechanism from a telephone amazed me.

“Standards !? We don’t need no stinkin’ standards! They inhibit our ability to add features that no one wants or needs!”

We must all differentiate our products for the sake of consumer choice … 😈

The pathetic thing is that both the Linux Java runtime and the Windows Java runtime were official Oracle Java runtimes. They *should* have acted identically. They didn’t.

Like I said, so much for that “write once, run everywhere” BS :(.

That was one of the things about the ‘cult of Java’ at Sun: they really pushed the consistency across platforms. I was ‘thrilled’ when Larry decided to buy them up and introduce Oracle ‘rules’ to another product line. I have a cousin who is an Oracle specialist, and she has spent years attending seminars and classes on new releases of the software. She made good money, but she wanted to ‘finally graduate’ and leave school behind. She managed to do it by taking a lower paying position with a medium-sized company that wasn’t interested in being ‘leading edge’, and is content to just stay at home and go to work every day knowing what the software does, and what it will do tomorrow.

Yeah, WORE is a nice concept … maybe someone should try it.

This particular code base dates back to 2002, it has special cases in it for Windows 95/98/ME, Windows NT, and Windows 2000, as well as Linux. It was written to the Java 1.4 spec and bundled with a Java 1.4 JVM. So even in 2002, Java wasn’t write-once/run everywhere, regardless of how zealous the “cult of Java” was at Sun.

My task was to make it run under a modern Java and on modern versions of Windows (it ran under “compatibility mode” in Windows Vista and up, but that’s going away for Windows 8). Some of the oddities I came across: stupendously ugly fonts depending on the JRE installed on Linux (forget about OpenJDK, it has to be the official Oracle one). My splash window was sent toFront(), but then it stayed in front forever on Linux, obscuring the login window that was supposed to appear in front of it so you could log in to the server. If I didn’t call toFront(), it opened behind the browser window that initiated its execution. So I ended up having to manually call toBack() on Linux, but not on Windows, because there it would put the splash window behind the browser window, and it was unnecessary on Windows anyhow because the login window opened in front of it. Then some of the font spacings changed, so some of my dialog boxes had to be made slightly larger to fit an IP address, and … well, I was rolling my eyes long before I came to that point. It didn’t take *that* much work to bring it up to 2012 standards, just a week or so to work through the entire user interface and work out all the glitches, but it was annoying that something supposedly “write once, run everywhere” actually wasn’t.

I wonder what the software for Curiosity was written in? Hrm…

It’s like all of the checking you have to do in CSS for various versions of IE, and other browsers – another standard that isn’t.

I’ve noticed Linux’s bad habit of burying some stuff and making me go looking for it. In most cases it is much better than Windows at handling multiple objects on the desktop, but then it will get a wild hair and the next thing opened will make something disappear to the back.

As for the spacecraft, the military uses Ada for embedded systems, like guidance, because of its multi-threading and matrix manipulation capabilities, but I would bet on some assembler called by C, with the bulk in one or another flavor of C. Other than the big database projects, C is still the standard among the local contractors. I would be willing to bet that they are generating the final code using various ‘tools’ to speed things up, OOP-like shortcuts. It’s hard to imagine anything higher level than C that would be able to deal with all of the functions required, and they must have a huge library of tested and debugged modules.

Once they get the raw data back here, they are probably using everything that ever existed to process it, but the lander only has to collect it.

Someone actually told me what the processors on the Mars lander were, and I forgot. It’s that whole CRSA thing that comes with aging, I guess :(. Anyhow, they’re fairly simple (but very low power consumption) processors that don’t have floating point, which is why NASA had to create their own image compression algorithms that didn’t require floating point.
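Image compression without floating point can be illustrated with an integer-only transform. Below is a minimal sketch of a lossless integer Haar step, often called the S-transform. It turns each pixel pair into a rounded-down average and a difference, using only integer arithmetic. This is an illustrative building block of the kind wavelet compressors use, not the algorithm NASA actually flew. The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* floor(x / 2) for an int; C's '/' truncates toward zero, so negatives need care.
   Pixel sums and differences are small, so -x cannot overflow here. */
static int floor_half(int x)
{
    return x >= 0 ? x / 2 : -((-x + 1) / 2);
}

/* Forward step: each pair (a, b) becomes avg = floor((a + b) / 2) and diff = a - b. */
void s_transform_forward(const int *in, size_t n, int *avg, int *diff)
{
    assert(n % 2 == 0);
    for (size_t i = 0; i < n / 2; i++) {
        int a = in[2 * i];
        int b = in[2 * i + 1];
        diff[i] = a - b;
        avg[i] = floor_half(a + b);
    }
}

/* Inverse step: a = avg + floor((diff + 1) / 2), b = a - diff; recovers the input exactly. */
void s_transform_inverse(const int *avg, const int *diff, size_t half, int *out)
{
    for (size_t i = 0; i < half; i++) {
        int a = avg[i] + floor_half(diff[i] + 1);
        out[2 * i] = a;
        out[2 * i + 1] = a - diff[i];
    }
}
```

The inverse rebuilds every pair exactly, so the transform is lossless. The actual compression would come from entropy-coding the difference values afterwards, since they tend to be small for smooth images.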

I know what I would write an embedded system in, which would probably be “C” unless we’re talking about something *really* tiny like one of those little PIC chips with 128 registers and 1K words of program memory, in which case assembly is the only way to do it, but I don’t write software for spacecraft. If my programs crash it’s generally not a big deal, since a watchdog will kick them back to life fairly swiftly (not that our software does a lot of crashing: my infrastructure’s been up for six months of pretty heavy use now without even breathing hard; we’re eating our own dogfood and it’s tasting pretty good so far). If a program on the spacecraft dies at the wrong time, however, the thing crashes and you lose a billion-dollar investment. Decidedly *not* my idea of something I trust to a language as easy to crash as “C”….

That’s why the military uses Ada, because it has strong internal checks and will not run at all if things aren’t right. It is great for embedded systems, but has no standards for I/O, which makes it pretty worthless for non-embeds.

I assume that they have more powerful processors for aggregating and transmitting the data, but most of the instrument recorders I dealt with were fairly modest 8-bit processors with the field units using solar cell – lithium battery power systems. They were all programmed with assembler, and most stored data on EEPROMs. The wired versions tended to use serial for data streams.

Lots of things have improved from those days, and with a nuclear battery they aren’t as limited as the solar cell versions, but they aren’t doing much that requires massive CPU capability.

Ya, the biggest improvement is that ARM chips that sip power and are dirt cheap are now widely available and are easily programmed in higher-level languages like Objective-C (iDevice) or Java (Android). But for spacecraft use you want something more rugged than a chip designed to be put into disposable iDevices and GooglePhones. Plus they’ve been working on this lander for quite a number of years, and state of the art back then wasn’t what it is today.

Back when I was doing embedded stuff like front panels and such, it was virtually all PIC chips. These things have PROM, EEPROM, or flash memory for program memory, anywhere from 1024 words to 8k words of it, and a register file of anywhere from 128 to 1024 bytes of data memory. Program memory is generally 12 to 24 bits wide, and each word encodes an operation and an address. There is an accumulator: you load a value into it, do an operation on the value, then store the value, i.e., simple load/store for arithmetic. I/O registers are mapped into this memory space. Each instruction takes a single cycle to run, other than jmp instructions, which take two cycles (note that there is no branch instruction, just a ‘skip on condition’ instruction that’ll hop you over the next instruction; since all instructions are a single word, that next instruction could be a jmp… or not). There is no interrupt timer: if you have multiple things that need doing, you need to count cycles, then use a state machine in your code to switch to the next task upon exhausting your cycle count. You can only do programmed load-accumulator/store-PC jumps to program words in the first 256 words of program memory, since you only have 8-bit load/store instructions, but there is a “jump absolute” instruction that’ll jump to arbitrary places in program memory. Since each instruction, including the jump instruction, is one word, and execution starts at location 0, you basically reserve the first 256 words of program memory as a jump vector table.
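The jump-table-plus-cycle-budget pattern described above can be modeled in portable C. This is a hedged sketch, not PIC code. An array of function pointers stands in for the table of jump instructions in the first 256 words. Each hypothetical task reports a made-up, fixed cycle cost in place of cycles counted by hand from the instruction listing.

```c
#include <assert.h>

/* Hypothetical task slots; on a real PIC each would be a jump entry in the vector table. */
enum { TASK_UART, TASK_KEYPAD, TASK_LCD, TASK_COUNT };

/* Each task does one short slice of work and returns the cycles that slice cost. */
typedef unsigned (*task_fn)(void);

static unsigned uart_runs, keypad_runs, lcd_runs;

/* Illustrative cycle costs only; real values would come from counting instructions. */
static unsigned uart_slice(void)   { uart_runs++;   return 30; }
static unsigned keypad_slice(void) { keypad_runs++; return 20; }
static unsigned lcd_slice(void)    { lcd_runs++;    return 50; }

/* The "jump vector table": a task index selects a handler, like a computed jump into a table. */
static task_fn const jump_table[TASK_COUNT] = { uart_slice, keypad_slice, lcd_slice };

/* Run slices round-robin until the cycle budget is used up; return the task to resume with.
   The last slice may overrun the budget, which is why slices are kept short. */
unsigned run_frame(unsigned next_task, unsigned cycle_budget)
{
    assert(next_task < TASK_COUNT);
    unsigned used = 0;
    while (used < cycle_budget) {
        used += jump_table[next_task]();
        next_task = (next_task + 1) % TASK_COUNT;
    }
    return next_task;
}
```

The returned index is the "state" that carries over to the next frame. With a budget of 100 cycles and these costs, one frame runs each task exactly once and comes back around to the UART slot.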

In short, those were devices that made the 6502 look fancy :). So that’s my experience with really small devices. Nowadays I’m up to my hips in Linux bio structures, block device schedulers, and other such esoteric things that I would really prefer not to know about, but so it goes. Some days I pine for the days of writing a state machine that bit-banged 9600 baud RS232 over two PIO lines while also scanning the keypad and displaying the results on a four-line LCD panel, but then I recall sitting there with a pad of graph paper mapping out exactly how many cycles it would take to oversample things sufficiently for reasonable accuracy when receiving RS232, and the state machine that debounced the keypad and handled key repeat was also ridiculously tedious, and I’m glad that I have hardware and a scheduler to handle such things today. I suspect that the chips used in Curiosity are a bit more powerful than that… but definitely not as powerful as the twin six-core Sandy Bridge Xeon processors in the prototype appliance on my desk at work, which are ridiculously overpowered for any purpose I can use them for, but they actually cost *less* than the Nehalem processors that they’re replacing, so… (shrug).
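A keypad debounce state machine with key repeat, of the kind mentioned above, might look roughly like this in C. It is a sketch under assumed parameters: the tick counts and names are hypothetical, and the keypad scan that produces each raw sample is not shown. On a small PIC this logic would be woven into the cycle-counted main loop rather than called as a function.

```c
#include <assert.h>

#define DEBOUNCE_TICKS 3   /* assumed: consecutive identical samples before a key counts */
#define REPEAT_DELAY  10   /* assumed: ticks after acceptance before auto-repeat starts */
#define REPEAT_RATE    4   /* assumed: ticks between repeated reports */

typedef struct {
    int candidate;  /* key currently being observed (0 = no key) */
    int stable;     /* consecutive samples the candidate has been seen */
    int held;       /* ticks since the key was accepted */
} debouncer;

/* Feed one raw keypad sample per tick (0 = no key); returns a key code to report, or 0. */
int debounce_step(debouncer *d, int raw)
{
    if (raw != d->candidate) {      /* contact bounce or a different key: start over */
        d->candidate = raw;
        d->stable = 1;
        d->held = 0;
        return 0;
    }
    if (raw == 0)                   /* no key pressed, nothing to report */
        return 0;
    if (d->stable < DEBOUNCE_TICKS) {
        if (++d->stable == DEBOUNCE_TICKS)
            return raw;             /* first report once the key has settled */
        return 0;
    }
    d->held++;                      /* key still down after being accepted */
    if (d->held >= REPEAT_DELAY && (d->held - REPEAT_DELAY) % REPEAT_RATE == 0)
        return raw;                 /* auto-repeat */
    return 0;
}
```

Consider a bouncy press sampled as 5, 0, 5, 5, 5. It reports key 5 once, on the last sample. Holding the key then produces a repeat 10 ticks later and every 4 ticks after that.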

Note that this lander is using thermocouples to turn decay heat into electricity, so we aren’t talking about a huge amount of power, and much of it has to be stored to power the electric motors on the wheels rather than being available for use by the processors. So don’t think that just because it has a nuclear battery it has a huge amount of power available to it. It doesn’t.

It’s a lot of power compared to Spirit or Opportunity, but it wouldn’t handle my current motherboard, much less the hard drives, especially when you add in the power requirements of instruments like the one on the boom that uses a laser to heat samples for testing. Moving a ton around, even on Mars, requires a lot of power, as does the transmitter to send the data back to Earth. I would assume that neither of those devices is used when the rover is moving.

The real question is where we are going to get the technicians, engineers, and scientists to replace the people in that control room when they retire, because my interaction with people just out of school lately isn’t encouraging. That’s why I’m interested in the Raspberry Pi. It might get kids interested in the basics again, and that’s what you need for these low-level devices.

Did a bit of checking. The power unit in the Curiosity puts out a whole 120 watts of power, expected to decay to 100 watts over the 17-year rated lifespan of this particular power unit. Definitely better than a reasonable-sized solar array could provide at Mars’s distance from the Sun, but hardly a huge amount of power.

Regarding where we’re going to get the technicians, engineers, and scientists: the same place we currently get them. We’ll import them like we import cheap trinkets from China, which is why I am one of four (4) native-born Americans in my employer’s Engineering department, and two of us are, uhm, let’s say we’re closer to 50 than to anything else :). And the two younger ones have CP, with too many quirks and twitches to do anything else, since there isn’t much call for, say, used car salesmen who twitch and shake, but there’s nothing wrong with their minds. Thing is, sooner or later the imported talent is going to go back home and set up in competition, while there’s a limited number of smart handicapped people to train as technicians, engineers, and scientists, so…

My current processor draws 95 watts [CPU/GPU combined on a chip] so we can forget that. They are definitely going to be stopping to use the instruments.

Hell, even the competent people graduating from good schools can’t get a job to log some experience, so WASF. Congress sold technology down the tubes when they jacked up the number of H-1Bs to please the CEOs looking for slave labor, and the country will pay the price.